Inside Question-Answering Systems: A Breakdown of Core NLP Architectures

Learn how to create scalable information retrieval systems through design techniques.

Introduction

In recent years, question-answering (QA) applications have become widespread. You can find them everywhere: in modern search engines, in chatbots, and in applications that simply extract relevant facts from large volumes of domain-specific text.

QA applications, as their name suggests, aim to find the most appropriate answer to a given question, typically by drawing on one or more text passages. Some of the earliest techniques were simple keyword or regular-expression searches. Such methods are clearly not ideal, since a document or a question may contain typos. Furthermore, regular expressions cannot match synonyms that may be highly relevant to a search term. Consequently, particularly in the age of Transformers and vector databases, these older methods have been superseded by newer, more powerful ones.
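To make the limitation concrete, here is a minimal Python sketch of keyword search with regular expressions. The corpus and the `keyword_search` helper are hypothetical, made up for illustration: an exact pattern finds its passage, but a synonym or a typo in the query finds nothing.

```python
import re

# Toy corpus, invented for illustration.
passages = [
    "The automobile was invented in the late 19th century.",
    "Paris is the capital of France.",
]


def keyword_search(pattern: str, docs: list[str]) -> list[str]:
    """Return the passages that contain a case-insensitive match of `pattern`."""
    regex = re.compile(pattern, re.IGNORECASE)
    return [doc for doc in docs if regex.search(doc)]


# The exact word from the passage is found...
print(keyword_search(r"\bautomobile\b", passages))
# ...but a synonym ("car") or a typo ("automobil") matches nothing.
print(keyword_search(r"\bcar\b", passages))  # []
print(keyword_search(r"\bautomobil\b", passages))  # []
```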

Extractive QA

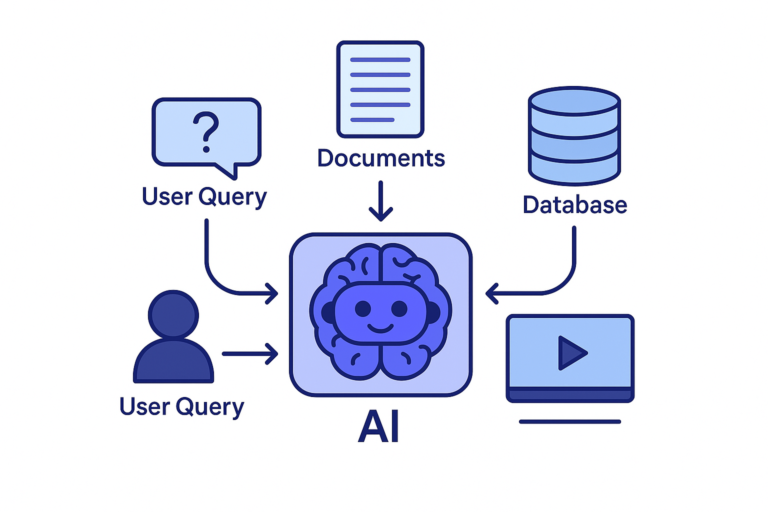

Extractive QA systems consist of three components:

- Retriever

- Database

- Reader

First, the retriever receives the question. Its goal is to return an embedding that represents the query. The retriever can be implemented in various ways, ranging from simple vectorization techniques such as TF-IDF and BM25 to more complex models. Most commonly, the retriever is built on a Transformer-based model such as BERT. Unlike simple methods that rely only on word frequencies, language models produce dense embeddings that capture the semantic meaning of text.
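As an illustration of the simplest kind of retriever, the sketch below computes a sparse TF-IDF vector for a query using only the Python standard library. It assumes a naive whitespace tokenizer and the smoothed IDF variant `ln((1 + N) / (1 + df)) + 1`, which is one common choice among several; the function names are hypothetical. A dense retriever would replace `tfidf_vector` with a call to a Transformer encoder such as BERT, which is not shown here.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Naive tokenizer: lowercase, split on whitespace, strip basic punctuation."""
    tokens = (word.strip(".,?!").lower() for word in text.split())
    return [t for t in tokens if t]


def fit_idf(corpus: list[str]) -> dict[str, float]:
    """Smoothed inverse document frequency for every term seen in the corpus."""
    n_docs = len(corpus)
    doc_freq = Counter()
    for doc in corpus:
        doc_freq.update(set(tokenize(doc)))  # count each term once per document
    return {term: math.log((1 + n_docs) / (1 + df)) + 1 for term, df in doc_freq.items()}


def tfidf_vector(text: str, idf: dict[str, float]) -> dict[str, float]:
    """Sparse, L2-normalised TF-IDF vector as a {term: weight} mapping."""
    tf = Counter(tokenize(text))
    # Terms never seen in the corpus have no IDF and are dropped.
    weights = {t: count * idf[t] for t, count in tf.items() if t in idf}
    norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
    return {t: w / norm for t, w in weights.items()}


corpus = ["Paris is the capital of France.", "Berlin is the capital of Germany."]
idf = fit_idf(corpus)
query_vec = tfidf_vector("What is the capital of France?", idf)
# "france" occurs in only one document, so it receives the largest weight.
print(max(query_vec, key=query_vec.get))
```

Such a vector only records which words occur and how rare they are, so two questions that share no words with a passage will never match it, which is exactly the gap that dense embeddings address.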

The query vector is then used to find the most similar vectors in an external collection of documents, each of which may contain the answer to the question. As a rule, this collection is processed in advance: every document is passed through the retriever, which outputs a corresponding embedding. These embeddings are then usually stored in a vector database that supports efficient similarity search.
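The search step can be sketched as a brute-force top-k cosine similarity scan. `InMemoryVectorStore` is a hypothetical stand-in for a real vector database, and the 3-dimensional embeddings are invented for illustration; production vector databases typically rely on approximate nearest-neighbour indexes rather than scoring every stored vector.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical minimal vector store holding (doc_id, embedding, text) rows."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, list[float], str]] = []

    def add(self, doc_id: str, embedding: list[float], text: str) -> None:
        # Document embeddings are computed once, ahead of query time, by the retriever.
        self._rows.append((doc_id, embedding, text))

    def search(self, query: list[float], k: int = 2) -> list[tuple[str, float, str]]:
        # Exhaustive scan: score every stored vector, then keep the k best.
        scored = [(doc_id, cosine(query, emb), text) for doc_id, emb, text in self._rows]
        return sorted(scored, key=lambda row: row[1], reverse=True)[:k]


store = InMemoryVectorStore()
store.add("d1", [0.9, 0.1, 0.0], "Paris is the capital of France.")
store.add("d2", [0.1, 0.9, 0.0], "The Nile flows through Egypt.")
store.add("d3", [0.8, 0.2, 0.1], "France borders Spain.")
for doc_id, score, text in store.search([1.0, 0.0, 0.0], k=2):
    print(doc_id, round(score, 3), text)
```

The exhaustive scan keeps the example short and exact, but its cost grows linearly with the number of documents, which is why large collections are usually served by dedicated indexes.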

The third component, the reader, takes the original texts of the documents whose vectors are most similar to the query vector and uses them to find the answer. The reader receives the original question and, for each of the k retrieved documents, extracts an answer from the text passage and returns the probability that this answer is correct. Finally, the extractive QA system returns the answer with the highest probability.
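A common way to implement the reader's answer extraction, assumed here rather than taken from this article, is to have a fine-tuned model such as BERT score every token of a passage as a possible answer start and end, with the question already taken into account in those scores. The sketch below takes such start and end logits as given (the numbers are invented), picks the most probable span in each passage, and returns the best answer across all retrieved passages. The `max_len` cap on span length is likewise an assumption.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def best_span(tokens: list[str], start_logits: list[float],
              end_logits: list[float], max_len: int = 10) -> tuple[str, float]:
    """Most probable answer span in one passage, scored as P(start) * P(end)."""
    p_start, p_end = softmax(start_logits), softmax(end_logits)
    best_text, best_prob = "", 0.0
    for i in range(len(tokens)):
        # The end may not precede the start, and spans are capped at max_len tokens.
        for j in range(i, min(i + max_len, len(tokens))):
            prob = p_start[i] * p_end[j]
            if prob > best_prob:
                best_text, best_prob = " ".join(tokens[i : j + 1]), prob
    return best_text, best_prob


def read(passages: list[tuple[list[str], list[float], list[float]]]) -> tuple[str, float]:
    """Apply the reader to every retrieved passage and keep the most probable answer."""
    return max((best_span(*p) for p in passages), key=lambda candidate: candidate[1])


# Invented logits for two retrieved passages (a real reader model would produce these).
retrieved = [
    (["Paris", "is", "the", "capital", "of", "France"],
     [4.0, 0.1, 0.0, 0.2, 0.0, 0.1],
     [3.5, 0.2, 0.0, 0.1, 0.0, 0.3]),
    (["France", "borders", "Spain"],
     [0.5, 0.2, 1.0],
     [0.3, 0.1, 1.2]),
]
answer, prob = read(retrieved)
print(answer, round(prob, 2))
```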

Open Generative QA

Open Generative QA follows the same framework as Extractive QA, except that it uses a generator instead of a reader. Unlike the reader, the generator does not extract the answer from a text passage. Instead, it constructs the answer from the information contained in the question and the retrieved passages. As in Extractive QA, the answer with the highest probability is selected as the final response.
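The sketch below illustrates two pieces of this pipeline under explicit assumptions: a hypothetical prompt layout in which the retrieved passages are placed before the question, and selection of the final answer by its sequence probability, i.e. the sum of its per-token log-probabilities. No real model is called; the candidate answers and their token log-probabilities are invented.

```python
import math


def build_prompt(question: str, passages: list[str]) -> str:
    """Hypothetical prompt layout: numbered retrieved passages, then the question."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


def sequence_logprob(token_logprobs: list[float]) -> float:
    # log P(answer) is the sum of the log-probabilities of its tokens.
    return sum(token_logprobs)


def pick_answer(candidates: list[tuple[str, list[float]]]) -> tuple[str, float]:
    """Return the candidate with the highest sequence probability, and that probability."""
    text, token_logprobs = max(candidates, key=lambda c: sequence_logprob(c[1]))
    return text, math.exp(sequence_logprob(token_logprobs))


prompt = build_prompt("What is the capital of France?", ["Paris is the capital of France."])
print(prompt)

# Invented candidates, as if generated by a model conditioned on `prompt`.
candidates = [
    ("Paris.", [-0.05, -0.10]),
    ("The capital of France is Paris.", [-0.2, -0.1, -0.1, -0.1, -0.2, -0.1, -0.1]),
]
answer, prob = pick_answer(candidates)
print(answer, round(prob, 3))
```

Because every additional token adds a negative log-probability, this raw score favours shorter answers; some systems normalise the score by answer length to counter that.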

Given how similar the two architectures are, one may ask whether an extractive or an open generative architecture is preferable. In practice, when a reader has direct access to a text passage that contains the relevant information, it is usually able to extract a clear and concise answer. Generative models, on the other hand, tend to produce broader and longer answers for a given context. That can be useful when a question is open-ended, but not when a precise or concise answer is required.

Retrieval-Augmented Generation

Recently, the popularity of the term “Retrieval-Augmented Generation” or “RAG” has skyrocketed in machine learning. In simple words, it is a framework for creating LLM applications whose architecture is based on Open Generative QA systems.

If an LLM application operates across many knowledge domains, the RAG retriever may include an additional step that determines which domain is most relevant to a given query. The retriever can then act differently depending on the recognized domain. For instance, the application can use several vector databases, each covering a particular topic. The most relevant information for a query is then retrieved from the vector database of the domain to which the query belongs.

Because the search covers only a subset of the documents (rather than all of them), this approach speeds up retrieval. Additionally, since the final retrieved context is built from more relevant material, it can make the retrieved results more reliable.
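One possible way to implement the domain-detection step, assumed here rather than prescribed by RAG, is to compare the query embedding with a centroid (mean embedding) of each domain's documents and then search only the winning domain's index. The domains, texts, and 3-dimensional embeddings below are hypothetical; in practice the router could just as well be a trained classifier or an LLM prompt.

```python
import math

Vector = list[float]

# Hypothetical per-domain indexes: domain -> [(text, embedding), ...].
DOMAIN_INDEXES: dict[str, list[tuple[str, Vector]]] = {
    "medicine": [("Aspirin reduces fever.", [0.9, 0.1, 0.0]),
                 ("Insulin regulates blood sugar.", [0.8, 0.0, 0.2])],
    "finance": [("Bonds pay periodic interest.", [0.0, 0.9, 0.1]),
                ("Stocks represent company ownership.", [0.1, 0.8, 0.1])],
}


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def centroid(vectors: list[Vector]) -> Vector:
    """Mean of a domain's document embeddings, used as that domain's representative."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


DOMAIN_CENTROIDS = {d: centroid([emb for _, emb in docs]) for d, docs in DOMAIN_INDEXES.items()}


def route(query_vec: Vector) -> str:
    # Step 1: pick the domain whose centroid is most similar to the query.
    return max(DOMAIN_CENTROIDS, key=lambda d: cosine(query_vec, DOMAIN_CENTROIDS[d]))


def retrieve(query_vec: Vector, k: int = 1) -> tuple[str, list[str]]:
    # Step 2: search only the selected domain's index instead of every document.
    domain = route(query_vec)
    ranked = sorted(DOMAIN_INDEXES[domain], key=lambda doc: cosine(query_vec, doc[1]), reverse=True)
    return domain, [text for text, _ in ranked[:k]]


print(retrieve([0.95, 0.05, 0.0]))
```

Only the documents of one domain are scored in step 2, which is where the speed-up described above comes from; the trade-off is that a misrouted query never sees the documents that could have answered it.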

Closed Generative QA

Closed Generative QA systems generate answers using only the question itself and the knowledge stored in the generator's parameters during training; they do not have access to any external data.

Since there is no need to search through a large collection of external documents, the clear benefit of closed QA systems is shorter processing time. However, this comes at a cost in training and accuracy: the generator must be powerful enough and trained on a substantial body of data to produce relevant answers.

Another drawback of the closed generative QA pipeline is that generators are unaware of any information that appeared after the data they were trained on was collected. A generator can be retrained on a more recent dataset to solve this problem. However, training generators is very resource-intensive, because they typically have millions or billions of parameters. In contrast, Extractive QA and Open Generative QA systems address the same issue simply by adding fresh documents to the vector database.
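The sketch below illustrates this difference with a hypothetical `DocumentIndex` and a frozen toy encoder (an L2-normalised bag of words standing in for the trained retriever). Adding a new fact only requires encoding one more document; the encoder itself is never retrained. The documents and the question are made up for illustration.

```python
import math
from collections import Counter


def encode(text: str) -> dict[str, float]:
    """Frozen toy encoder: L2-normalised bag of words, a stand-in for the trained retriever."""
    words = (w.strip(".,?!") for w in text.lower().split())
    counts = Counter(w for w in words if w)
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {w: c / norm for w, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors have unit length, so their dot product equals cosine similarity.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


class DocumentIndex:
    """Hypothetical minimal index: document texts plus their precomputed vectors."""

    def __init__(self) -> None:
        self.texts: list[str] = []
        self.vectors: list[dict[str, float]] = []

    def add(self, text: str) -> None:
        # New knowledge = encode the new document and append it; nothing is retrained.
        self.texts.append(text)
        self.vectors.append(encode(text))

    def top1(self, question: str) -> str:
        q = encode(question)
        best = max(range(len(self.texts)), key=lambda i: cosine(q, self.vectors[i]))
        return self.texts[best]


question = "Where were the 2024 Summer Olympics held?"
index = DocumentIndex()
index.add("The 2020 Summer Olympics were held in Tokyo.")
index.add("Photosynthesis converts light into chemical energy.")
before = index.top1(question)  # only the 2020 document is available
index.add("The 2024 Summer Olympics were held in Paris.")  # fresh fact, no retraining
after = index.top1(question)
print(before)
print(after)
```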

The closed generative approach is typically used in applications that answer general questions. In narrow, specialized domains, the performance of closed generative models tends to deteriorate.

Conclusion

In this article, I have described three main approaches to building QA systems. Each has its advantages and disadvantages, so there is no clear winner. To achieve good performance, it is therefore essential to analyze the problem first and only then select the appropriate type of QA architecture.

It is worth noting that the Open Generative QA architecture is currently a hot topic in machine learning, particularly with the advent of cutting-edge RAG approaches. RAG systems are evolving rapidly, so if you are an NLP engineer, you should definitely keep an eye on them.